This is a guest post by Anthony Beck, Honorary fellow, and Dave Harrison, Research fellow, at the University of Leeds School of Computing.

Data repository as research tool

In a previous post, we examined why Open Science is necessary to take advantage of the huge corpus of data generated by modern science. In our project Detection of Archaeological residues using Remote sensing Techniques, or DART, we adopted Open Science principles and made all the project’s extensive data available through a purpose-built data repository built on the open-source CKAN platform. But with so many academic repositories, why did we need to roll our own? A final post will look at how the portal was implemented.

DART: data-driven archaeology

DART’s overall aim is to develop analytical methods to differentiate archaeological sediments from non-archaeological strata, on the basis of remotely detected phenomena (e.g. resistivity, apparent dielectric permittivity, crop growth and thermal properties). DART is a data-rich project: over a 14-month period, in-situ soil moisture, soil temperature and weather data were collected at least once an hour; ground-based geophysical surveys and spectro-radiometry transects were conducted at least monthly; aerial surveys collecting hyperspectral, LiDAR and traditional oblique and vertical photographs were flown throughout the year; and laboratory analyses and tests were conducted on both soil and plant samples. The data archive itself is on the order of terabytes.

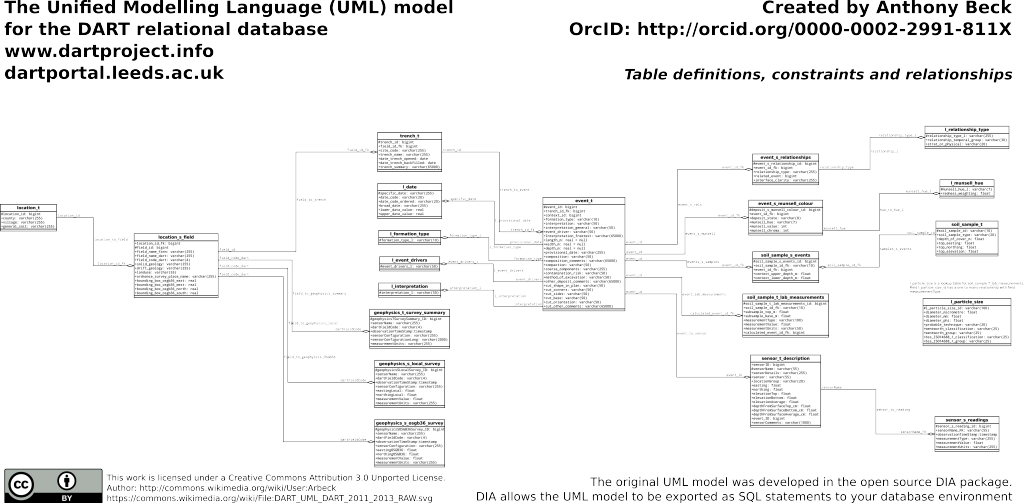

Analysis of this archive is ongoing; meanwhile, this data and other resources are made available through open access mechanisms under liberal licences and are thus accessible to a wide audience. To achieve this we used the open-source CKAN platform to build a data repository, DARTPortal, which includes a publicly queryable spatio-temporal database (on the same host), and can support access to individual data as well as mining or analysis of integrated data.
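As a rough illustration of what programmatic access to a CKAN-based repository can look like, the sketch below builds a search request for CKAN's standard Action API (`package_search`) and reads dataset titles out of a response. The host name `ckan.example.org`, the helper names and the sample response are assumptions made for illustration; they are not the DART portal's actual address or data.

```python
import json
from urllib.parse import urlencode

# Hypothetical portal address: replace with the real CKAN host.
PORTAL = "https://ckan.example.org"


def package_search_url(query, rows=5, portal=PORTAL):
    """Build a URL for CKAN's package_search action."""
    params = urlencode({"q": query, "rows": rows})
    return f"{portal}/api/3/action/package_search?{params}"


def dataset_titles(response_text):
    """Extract dataset titles from a package_search JSON response."""
    payload = json.loads(response_text)
    if not payload.get("success"):
        raise ValueError("CKAN reported a failed request")
    return [pkg.get("title", pkg["name"]) for pkg in payload["result"]["results"]]


# Offline demonstration using a made-up response with the usual CKAN shape.
sample = json.dumps({
    "success": True,
    "result": {
        "count": 1,
        "results": [{"name": "soil-moisture", "title": "Soil moisture"}],
    },
})
print(package_search_url("soil moisture"))
print(dataset_titles(sample))
```

In practice the URL would be fetched with any HTTP client and the response body passed to `dataset_titles`; the example stays offline so that it runs without a network connection.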

This means we can share the data analysis and transformation processes and demonstrate how we transform data into information and synthesise this information into knowledge (see, for example, this IPython notebook which dynamically exploits the database connection). This is the essence of Open Science: exposing the data and processes that allow others to replicate and more effectively build on our science.

Lack of existing infrastructure

Pleased though we are with our data repository, it would have been nice not to have to build it! Individual research projects should not bear the burden of implementing their own data repository framework. This is much better suited to local or national institutions where the economies of scale come into their own. Yet in 2010 the provision of research data infrastructure that supported what DART did was either non-existent or poorly advertised. Where individual universities provided institutional repositories, these were focused on publications (the currency of prestige and career advancement) and not on data. Irrespective of other environments, none of the DART collaborating partners provided such a data infrastructure.

Data sharing sites like Figshare did not yet exist, and when they did appear the size of our hyperspectral data, in particular, was quite rightly a worry. This situation is slowly changing, but it is still far from ideal. The positions taken by Research Councils UK and the Engineering and Physical Sciences Research Council (EPSRC) on improving access to data are key catalysts for change. The EPSRC statement is particularly succinct:

Two of the principles are of particular importance: firstly, that publicly funded research data should generally be made as widely and freely available as possible in a timely and responsible manner; and, secondly, that the research process should not be damaged by the inappropriate release of such data.

This has produced a simple economic issue: if research institutions cannot demonstrate that they can manage research data in the manner required by the funding councils, then they will become ineligible to receive grant funding from those councils. The impact is that the majority of universities are now developing their own, or collaborating on communal, data repositories.

But what about formal data deposition environments?

DART was generously funded through the Science and Heritage Programme supported by the UK Arts and Humanities Research Council (AHRC) and the EPSRC. This means that these research councils will pay for data archiving in the appropriate domain repository, in this case the Archaeology Data Service (ADS). So why produce our own repository?

Deposition to the ADS would only have occurred after the project had finished. With DART, the emphasis has been on re-use and collaboration rather than primarily on archiving. These goals are not mutually exclusive: the methods adopted by DART mean that we produced data that is directly suitable for archiving (well-documented ASCII formats, rich supporting description and discovery metadata, etc.) whilst also allowing more rapid exposure and access to the ‘full’ archive. This resulted in DART generating much richer resource discovery and description metadata than would have been the case if the data had simply been deposited into the ADS.

The point of the DART repository was to produce an environment which would facilitate good data management practice and collaboration during the lifetime of the project. This is representative of a crucial shift in thinking, where projects and data collectors consider re-use, discovery, licences and metadata at a much earlier stage in the project life cycle: in effect, to create dynamic and accessible repositories that have impact across the broad stakeholder community rather than focussing solely on the academic community. The same underpinning philosophy of encouraging re-use is seen at both Figshare and DataHub. Whilst formal archiving of data is to be encouraged, if it is not re-useable, or more importantly easily re-useable, within orchestrated scientific workflow frameworks then what is the point?

In addition, it is unlikely that the ADS will take the full DART archive. It has been said that archaeological archives can produce lots of extraneous or redundant ‘stuff’. This can be exacerbated by the unfettered use of digital technologies – how many digital images are really required for the same trench? Whilst we have sympathy with this argument, there is a difference between ‘data’ and ‘pretty pictures’: as data analysts, we consider that a digital photograph is normally a data resource and rarely a pretty picture. Hence, every image has value.

This is compounded when advances in technology mean that new data can be extracted from ‘redundant’ resources. For example, Structure from Motion (SfM) is a Computer Vision technique that extracts 3D information from 2D images. From a series of overlapping photographs, SfM techniques can be used to extract 3D point clouds and generate orthophotographs from which accurate measurements can be taken. In the case of SfM there is no such thing as redundancy, as each image becomes part of a ‘bundle’ and the statistical characteristics of the bundle determine the accuracy of the resultant model. However, one does need to be pragmatic, and it is currently impractical for organisations like the ADS to accept unconstrained archives. That said, it is an area that needs review: if a research object is important enough to have detailed metadata created about it, then it should be important enough to be archived.

For DART, this means that the ADS is hosting a subset of the archive in long-term re-use formats, which will be available in perpetuity (which formally equates to a maximum of 25 years), while the DART repository will hold the full archive in long-term re-use formats until we run out of server money. We are in discussion with Leeds University to migrate all the data objects over to the new institutional repository with sparkling new DOIs, and we can transfer the metadata held in CKAN over to Open Knowledge’s public repository, the DataHub. In theory nothing should be lost.

How long is forever?

The point on perpetuity is interesting. Collins Dictionary defines perpetuity as ‘eternity’. However, the ADS defines ‘digital’ perpetuity as 25 years. This raises the question: is it more effective in the long term to deposit in ‘formal’ environments (with an intrinsic focus on preservation format over re-use), or in ‘informal’ environments (with a focus on re-use and engagement over preservation: Flickr, Wikimedia Commons, DART repository based on CKAN, etc.)? Both Flickr and Wikimedia Commons have been around for over a decade. Distributed peer-to-peer sharing, as used in Git, produces more robust and resilient environments which are equally suited to longer term preservation. Whilst the authors appreciate that the situation is much more nuanced, particularly with the introduction of platforms that facilitate collaborative workflow development, this does have an impact on long-term deployment.

Choosing our licences

Licences are fundamental to the successful re-use of content. Licences describe who can use a resource, what they can do with this resource and how they should reference any resource (if at all).

Two lead organisations have developed legal frameworks for content licensing: Creative Commons (CC) and Open Data Commons (ODC). Until the release of CC version 4, published in November 2013, the CC licences did not cover data. Between them, CC and ODC licences can cover all forms of digital work.

At the top level the licences are permissive public domain licences (CC0 and PDDL respectively) that impose no restrictions on the licensee’s use of the resource. ‘Anything goes’ in a public domain licence: the licensee can take the resource and adapt it, translate it, transform it, improve upon it (or not!), package it, market it, sell it, etc. Constraints can be added to the top level licence by employing the following clauses:

- BY – By attribution: the licensee must attribute the source.

- SA – Share-alike: if the licensee adapts the resource, they must release the adapted resource under the same licence.

- NC – Non commercial: the licensee must not use the work within a commercial activity without prior approval. Interestingly, in many areas of the world, the use of material in university lectures may be considered a commercial activity. The non-commercial restriction is about the nature of the activity, not the legal status of the institution doing the work.

- ND – No derivatives: the licensee cannot derive new content from the resource.

Each of these clauses decreases the ‘open-ness’ of the resource. In fact, the NC and ND clauses are not intrinsically open (they restrict both who can use and what you can do with the resource). These restrictive clauses have the potential to produce licence incompatibilities which may introduce profound problems in the medium to long term. This is particularly relevant to the SA clause. Share-alike means that any derived output must be licensed under the same conditions as the source content. If content is combined (or mashed up) – which is essential when one is building up a corpus of heritage resources – then content created under an SA clause cannot be combined with content that includes a restrictive clause (BY, NC or ND) that is not in the source licence. This licence incompatibility has a significant impact on the nature of the data commons. It has the potential to fragment the data landscape, creating pockets of knowledge which are rarely used in mainstream analysis, research or policy making. This will be further exacerbated when automated data aggregation and analysis systems become the norm. A permissive licence without clauses like Non-commercial, Share-alike or No-derivatives removes such licence incompatibility and downstream fragmentation issues.
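The combination rule described above can be sketched in a few lines of code. This is a deliberately simplified model, assuming a licence can be represented as nothing more than its set of clauses; the names `can_combine`, `BY_SA` and so on are illustrative, and real licence compatibility involves legal detail that this toy check ignores.

```python
# A licence is modelled as the set of clauses it carries (a simplification).
CC0 = frozenset()                 # public domain style: no clauses
BY = frozenset({"BY"})
BY_SA = frozenset({"BY", "SA"})
BY_NC = frozenset({"BY", "NC"})
BY_ND = frozenset({"BY", "ND"})


def can_combine(a, b):
    """Return True if content under licences a and b can be mashed up.

    Rules taken from the text above:
    - ND forbids deriving new content at all, so it blocks any mash-up.
    - SA forces the output to carry the same licence, so the other source
      may not bring a clause that the SA licence does not already contain.
    """
    if "ND" in a or "ND" in b:
        return False
    for share_alike, other in ((a, b), (b, a)):
        if "SA" in share_alike and not other <= share_alike:
            return False
    return True


print(can_combine(BY, BY_SA))     # BY content fits inside a BY-SA output
print(can_combine(BY_SA, BY_NC))  # NC is not part of BY-SA
print(can_combine(CC0, BY_NC))    # no clause conflicts with a permissive source
```

Under these assumptions, a fully permissive source never blocks a combination, which is the practical argument for avoiding the NC, SA and ND clauses.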

For completeness, specific licences have been created for Open Government Data. The UK Open Government Licence for public sector information is essentially an open licence with a BY attribution clause.

At DART we have followed the guidelines of The Open Data Institute and separated out creative content (illustrations, text, etc.) from data content. Hence, the DART content is either CC-BY or ODC-BY respectively. In the future we believe it would be useful to drop the BY (attribution) clause. This would stop attribution stacking: if the resource you are using is a derivative of a derivative of a derivative (you get the picture), at what stage do you stop attributing? More generally, anything which requires bureaucracy, such as attributing an image in a PowerPoint presentation, inhibits re-use (one should always assume that people are intrinsically lazy). There is a post advocating ccZero+ by Dan Cohen. However, impact tracking may mean that the BY clause becomes a default for academic deposition.
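To make the attribution stacking concern concrete, here is a minimal sketch, assuming each resource simply records its creator and the resources it was derived from. The `Resource` class, the `upstream_credits` helper and the example names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    creator: str
    sources: list = field(default_factory=list)  # resources this one derives from


def upstream_credits(resource):
    """Collect every upstream creator a BY clause would oblige us to credit."""
    credits = []
    stack = list(resource.sources)
    while stack:
        current = stack.pop()
        if current.creator not in credits:
            credits.append(current.creator)
        stack.extend(current.sources)
    return credits


# A derivative of a derivative of a derivative.
survey = Resource("Survey team")
mosaic = Resource("Analyst A", [survey])
model = Resource("Analyst B", [mosaic])
slide = Resource("Lecturer", [model])

print(upstream_credits(slide))
print(len(upstream_credits(slide)))
```

Every extra step in the derivation chain adds another name to the list, and a mash-up of several such chains has to carry all of them.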

The ADS uses a more restrictive bespoke default licence which does not map to national or international licence schemes (they also don’t recognise non-CC licences). Resources under this licence can only be used for teaching, learning, and research purposes. Of particular concern is their use of the NC clause and possible use of the ND clause (depending on how you interpret the licence). Interestingly, policy changes mean that the use of data under the bespoke ADS licence becomes problematic if university teaching activities are determined to be commercial. It is arguable that the payment of tuition fees represents a commercial activity. If this is true then resources released under the ADS licence cannot be used within university teaching which is part of a commercial activity. Hence, the policy change in student tuition and university funding has an impact on the commercial nature of university teaching which has a subsequent impact on what data or resources universities are licensed to use. Whilst it may never have been the intention of the ADS to produce a licence with this potential paradox, it is a problem when bespoke licences are developed, even if they were originally perceived to be relatively permissive licences. To remove this ambiguity it is recommended that submissions to the ADS are provided under a CC licence which renders the bespoke ADS licence void.

In the case of DART, these licence variations with the ADS should not be a problem. Our licences are permissive (by attribution is the only clause we have included). This means the ADS can do anything they want with our resources as long as they cite the source. In our case this would be the individual resource objects or collections on the DART portal. This is a good thing, as the metadata on the DART portal is much richer than the metadata held by the ADS.

Concerns about opening up data, and responses which have proved effective

Christopher Gutteridge (University of Southampton) and Alexander Dutton (University of Oxford) have collated a Google doc entitled ‘Concerns about opening up data, and responses which have proved effective’. This document describes a number of concerns commonly raised by academic colleagues about increasing access to data. For DART two issues became problematic that were not covered by this document:

- The relationship between open data and research novelty and the impact this may have on a PhD submission.

- Journal publication – specifically that a journal won’t publish a research paper if the underlying data is open.

The former point is interesting – does the process of undertaking open science, or at least providing open data, undermine the novelty of the resultant scientific process? With open science it could be difficult to directly attribute the contribution, or novelty, of a single PhD student to an openly collaborative research process. That said, if online versioning tools like Git are used, then it is clear who has contributed what to a piece of code or a workflow (the benefits of the BY clause). This argument is less solid when we are talking solely about open data. Whilst it is true that other researchers (or anybody else for that matter) have access to the data, it is highly unlikely that multiple researchers will use the same data to answer exactly the same question. If they do ask the same question (and making the optimistic assumption that they reach the same conclusion), it is still highly unlikely that they will have done so by the same methods; and even if they do, their implementations will be different. If multiple methods using the same source data reach the same conclusion then there is an increased likelihood that the conclusion is correct and that the science is even more certain. The underlying point here is that 21st-century scientific practice will substantially benefit from people showing their working. Exposure of the actual process of scientific enquiry (the algorithms, code, etc.) will make the steps between data collection and publication more transparent, reproducible and peer-reviewable – or, quite simply, more scientific. Hence, we would argue that open data and research novelty is only a problem if plagiarism is a problem.

The journal publication point is equally interesting. Publications are the primary metric for academic career progression and kudos. In this instance it was the policy of the ‘leading journal in this field’ that they would not publish a paper from a dataset that was already published. No credible reasons were provided for this clause, which seems draconian in the extreme. It does indicate that no one-size-fits-all approach will work in the academic landscape. It will also be interesting to see how this journal, which publishes work which is mainly funded by EPSRC, responds to the EPSRC guidelines on open data.

This is also a clear demonstration that the academic community needs to develop new metrics that are more suited to 21st-century research and scholarship, by directly linking academic career progression to other sources of impact that go beyond publications. Furthermore, academia needs some high-profile exemplars that demonstrate clearly how to deal with such change. The policy shift and ongoing debate concerning ‘Open access’ publications in the UK is changing the relationship between funders, universities, researchers, journals and the public – a similar debate needs to occur about open data and open science.

The altmetrics community is developing new metrics for “analyzing, and informing scholarship” and has described its ethos in its manifesto. The Research Councils and Governments have taken a much greater interest in the impact of publicly funded research. Importantly, public, social and industry impact are as important as academic impact. It is incumbent on universities to respond to this by directly linking academic career progression through to impact and by encouraging improved access to the underlying data and processing outputs of the research process through data repositories and workflow environments.

This post is by a guest poster. If you would like to write something for the Open Knowledge Foundation blog, please see the submissions page.