In collaboration with Patricio Del Boca

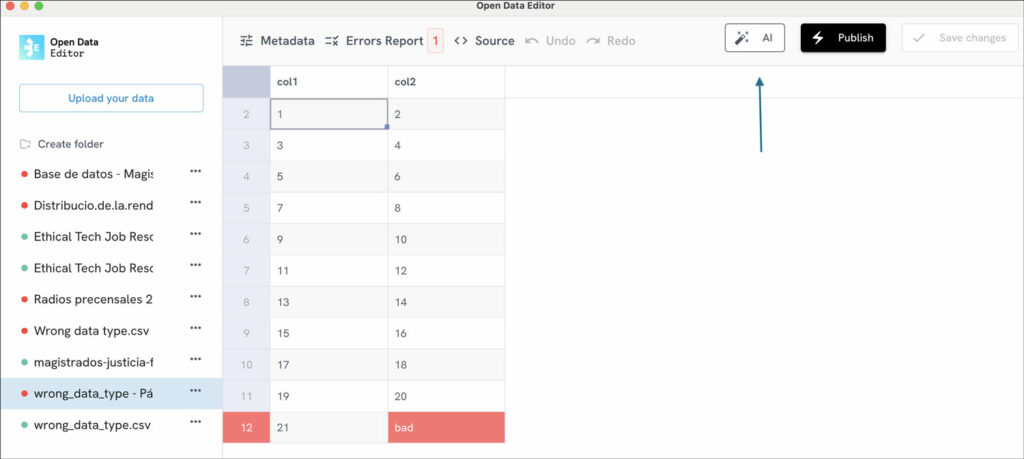

This post discusses Open Knowledge Foundation’s (OKFN) approach to integrating AI into the Open Data Editor (ODE), our new open source desktop application that helps data practitioners detect errors in tables.

To ensure thoughtful AI integration, we are emphasising interdisciplinary collaboration, user feedback and ethical considerations, not just technical implementation. We are prioritising user needs, for example by limiting the data shared with OpenAI to metadata and by making AI features optional, while acknowledging practical constraints such as deadlines and limited resources.

Read on for the lessons we learned along the way, including the value of asking critical questions, avoiding perfectionism, and balancing innovation with ethical and practical considerations.

Why has OKFN opted for a non-technical approach to reflecting on AI?

OKFN is convinced that AI integrations cannot be approached solely from the technical side. Teams must have conversations and reflections, both internally and with the community, to ensure that such integrations target specific problems and are communicated clearly and transparently. These conversations not only helped us define what kind of implementation we were going to build, but also allowed us to develop internal capacity and new soft skills, so that we can answer key questions and spot possible red flags when working with AI.

Limitations and general comments on AI reflections

As in any project, our work had certain external and internal deadlines and constraints. In particular, we had a limited period of time for implementation. Initially, we planned a two-month workflow with four work calls and asynchronous review of a document containing guidelines and ideas. However, as our reflections progressed, we also called on Madelon Hulsebos (our AI Consultant) to resolve doubts and validate the angle of the integration. It should be noted that the first general conversation was not held only between Madelon and Romina Colman (Product Owner): part of the project’s technical team and our UX designer also attended. Why? To address the integration from all angles, but also to challenge the idea that working with AI is something solved at the code level, and to avoid the disconnect currently seen in the ecosystem, where technical people hold their conversations on one side and academia, activists and NGOs hold theirs on the other.

This is what we learned:

- Create an interdisciplinary team to reflect on artificial intelligence integrations: while incorporating AI into products may be easy for a skilled technical person, it is important not to confine its implementation to the realm of code, and to open up space for conversations where multiple voices can be heard. For example, in some of our early team talks we discussed possible integrations and concluded: ‘But we don’t need AI to do that’.

- Ask yourselves many questions: it is important not to take anything for granted and to keep asking questions throughout the process. For example, these were some of the questions we asked ourselves about the ODE: ‘How do I explain to the user what is happening at this stage?’, ‘How do I make sure they know what information we are sharing when they click on the button?’, etc.

- Listen to your users’ needs and concerns: months before we started working on the AI integration, we asked potential users in our first interviews whether they used AI in their work with data. At that time, journalists and people working in NGOs shared their concerns about using AI and stressed the importance of privacy in their work. These concerns were central to the decisions we made later, such as having a predefined prompt in the application and explaining what information ODE shares if the user decides to work with AI.

- Don’t strive for perfection: every project has deadlines and resource constraints, and AI work is often one component among many, so it is necessary to strike a balance between all of them. For example, the ODE team wants to start testing with open source LLMs. When we started talking about it, we realised that we couldn’t do it in the time we had, so we instead asked ourselves how to improve and narrow down the existing integration (bearing in mind that we were using OpenAI), with the plan of moving on to other models afterwards.

The ODE uses OpenAI as a third-party provider for its AI integration. Will we keep using it?

The current AI integration of ODE uses OpenAI. This was a practical decision: it was easy to implement, and it is the current state of the art for integrating LLMs into applications.

The quick integration of OpenAI provided useful feedback:

- We were able to quickly test integrations and uses of LLMs in the ODE and start exploring the capabilities and limits of the technology.

- User research that we conducted in March 2024 showed that journalists (potential users of the ODE) and other data practitioners had concerns about using the ODE if the app shared the full content of their files.

Based on this feedback, we made two decisions:

- Limit the data we share with OpenAI to the file’s metadata only.

- Make the use of OpenAI optional (nothing is shared by default).
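To illustrate what these two decisions mean in practice, here is a minimal Python sketch of a metadata-only, opt-in payload. It is not ODE’s actual code: the function names `infer_type`, `build_metadata` and `payload_for_llm` are hypothetical, and it assumes that “metadata” means column names, roughly inferred column types and a row count. The post does not specify exactly which fields ODE sends.

```python
import csv
import io
from typing import Optional


def infer_type(values: list[str]) -> str:
    """Very rough type inference over a column's non-empty values (illustrative only)."""
    non_empty = [v for v in values if v.strip() != ""]
    if not non_empty:
        return "unknown"

    def parses_as(cast, v: str) -> bool:
        try:
            cast(v)
            return True
        except ValueError:
            return False

    if all(parses_as(int, v) for v in non_empty):
        return "integer"
    if all(parses_as(float, v) for v in non_empty):
        return "number"
    return "string"


def build_metadata(csv_text: str) -> dict:
    """Summarise a CSV table as column names, types and row count, with no cell values."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return {"row_count": 0, "columns": []}
    header, body = rows[0], rows[1:]
    columns = []
    for i, name in enumerate(header):
        # Cell values are only used locally to infer a type; they are never returned.
        column_values = [row[i] if i < len(row) else "" for row in body]
        columns.append({"name": name, "type": infer_type(column_values)})
    return {"row_count": len(body), "columns": columns}


def payload_for_llm(csv_text: str, user_opted_in: bool) -> Optional[dict]:
    """Return what would be sent to an external LLM, or None if the user has not opted in."""
    if not user_opted_in:
        return None  # nothing is shared by default
    return build_metadata(csv_text)
```

For a small table such as `"name,age\nAna,34\nLuis,\n"`, `payload_for_llm(..., user_opted_in=False)` returns `None`, while opting in yields only the two column names with their inferred types and a row count of 2; names like “Ana” never leave the machine. Keeping the opt-in check in a single function is one way to make the “nothing is shared by default” rule easy to audit.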

In 2025, we are working on:

- Exploring other use cases where LLMs can be useful.

- Working towards the integration of local LLMs, that is, making ODE work with LLMs running on the user’s machine instead of a third-party provider.