The following is a post by Rufus Pollock, co-Founder of the Open Knowledge Foundation.

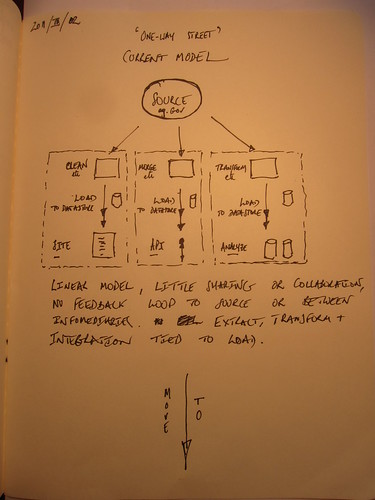

The Present: A One-Way Street

At present, the basic model for data processing is a “one way street”. Sources of data, such as government, publish data out into the world, where (if we are lucky) it is processed by intermediaries such as app creators or analysts, before finally being consumed by end users [1].

It is a one way street because there is no feedback loop, no sharing of data back to publishers and no sharing between intermediaries.

So what should be different?

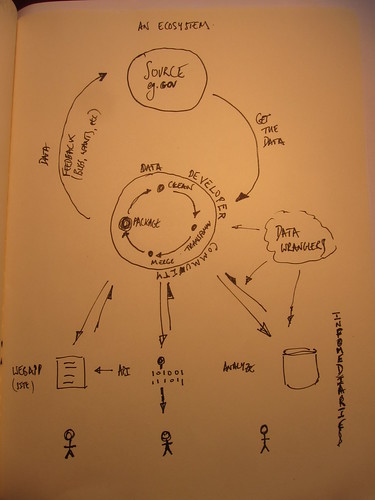

The Future: An Ecosystem

What we should have is an ecosystem. In an ecosystem there are data cycles: infomediaries (intermediate consumers of data such as builders of apps and data wranglers) should also be publishers who share back their cleaned / integrated / packaged data into the ecosystem in a reusable way. These cleaned and integrated datasets are, of course, often more valuable than the original source.

In addition, corrected data, or relevant “patches”, should find their way back to data producers so data quality improves at the source. Finally, end users of data need not be passive consumers but should also be able to contribute back by flagging errors or submitting corrections themselves.

With the introduction of data cycles we have a real ecosystem, not a one way street, and this ecosystem thrives on collaboration, componentization and open data.

What is required to develop this ecosystem model rather than a one way street? Key changes include (suggestions welcome!):

- Infomediaries to publish what they produce (and tools to make this really easy)

- Data packaging and patching format (better ways to publish and share data)

- Publisher notification of patches (pull requests) with automated integration (merge) tools

We’re starting to see the first versions of tools that will help here [2]: for example Google Refine with its javascript “patch” format, Scraperwiki and our own CKAN Data Management System. For some items, such as increased sharing and publication, evangelism and a change in attitude (to a world in which the default is to publish) may be more important than tools. Even here, though, better tools can make it easier to publish as well as provide incentives to do so (because publishing gives you access to those tools).

But it is just a beginning and there’s still a good way to go before we’ve really made the transition from the one-way street to a proper (open) data ecosystem.

Annexe: Some Illustrations of Our Current One Way Street

Currently it’s common to hear people describe web-apps or visualizations that have been built using some particular dataset. However, it’s unusual to hear them then say “and I published the cleaned data, and the data cleaning code back to the community in a way that was reusable” and even rarer to hear them say “and the upstream provider has corrected the errors we found in the data based on our reports”. (It’s also rare to hear people talk about the datasets they’ve created as opposed to the apps or visualizations they’ve built).

We know about this first hand. When the UK government first published its 25k spending data last Autumn we worked hard as part of our Where Does My Money Go? project to process and load the data so it was searchable and visualizable. Along the way we found data ‘bugs’ of the kind that are typical in this area — dates presented as 47653 (time since epoch), dates presented in inconsistent styles (US format versus UK format), occasional typos in names of departments or other entities etc.
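The kinds of date ‘bugs’ listed above can be flagged mechanically before any fix is attempted. What follows is a minimal sketch, not the code we actually ran: the function name `classify_date` and its labels are hypothetical, and it assumes dates arrive either as bare digits, as ISO `YYYY-MM-DD`, or as slash-separated day/month/year in some order.

```python
import re

def classify_date(value):
    """Label a raw date string by the kind of problem it may have.

    Labels (all hypothetical names for illustration):
      "serial"    - bare digits, e.g. a count of days since some epoch
      "iso"       - YYYY-MM-DD
      "uk"        - slash date that can only be day/month/year
      "us"        - slash date that can only be month/day/year
      "ambiguous" - slash date valid under both readings
      "unknown"   - anything else
    """
    value = value.strip()
    if re.fullmatch(r"\d+", value):
        return "serial"
    if re.fullmatch(r"\d{4}-\d{2}-\d{2}", value):
        return "iso"
    match = re.fullmatch(r"(\d{1,2})/(\d{1,2})/(\d{4})", value)
    if match:
        first, second = int(match.group(1)), int(match.group(2))
        if first <= 12 and second <= 12:
            return "ambiguous"
        if first > 12 and second <= 12:
            return "uk"  # first field cannot be a month
        if second > 12 and first <= 12:
            return "us"  # second field cannot be a month
    return "unknown"

# Example values, made up for illustration apart from 47653.
for raw in ["47653", "31/03/2010", "03/31/2010", "03/04/2010"]:
    print(raw, "->", classify_date(raw))
```

A report of these labels per column is something a publisher could act on, even where the right fix for an “ambiguous” value needs a human decision.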

We did our best to correct these as part of the load. However, it’s doubtful any of the issues we found got fixed (and certainly not as a result of our work) and we also didn’t do much to share with other datawranglers who were working on the data.

Why was this?

First, there is no mechanism to feed back to the publishers. We did notify data.gov.uk of some of the issues we found, but it is very hard for them to act on this: the precise ‘publisher’ within a department may be hard to identify and may even be a machine (if the data is automatically produced in some way).

Second, there is no easy format in which to share fixes. Our cleaning code was public on the web, but a bunch of python if statements is not the best ‘patch’ format for data. In a perfect world we’d have a patch format for data (or even just for CSVs), and one that was: algorithmic rather than line-based (so one could specify in one statement that column X is wrongly formatted in this way, rather than have 10k line changes); easily reviewable (we’re patching government spending data here!); and automatically applicable (in short, a patch format with tool support).
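To make the idea of an algorithmic patch concrete, here is a minimal sketch under stated assumptions. No such format exists as a standard: the `PATCH` dictionary, its keys, the `reformat_date` operation and the sample rows are all hypothetical, invented for illustration. The point is only that one reviewable statement can describe a column-wide fix and be applied automatically.

```python
import csv
import io
from datetime import datetime

# Hypothetical patch: a single statement saying "column `date` is in
# US format and should be ISO". The keys are invented for this sketch.
PATCH = {
    "column": "date",
    "op": "reformat_date",
    "from": "%m/%d/%Y",
    "to": "%Y-%m-%d",
}

def apply_patch(csv_text, patch):
    """Apply one column-level patch to CSV text and return the patched CSV text."""
    if patch["op"] != "reformat_date":
        raise ValueError("unsupported operation: " + patch["op"])
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        parsed = datetime.strptime(row[patch["column"]], patch["from"])
        row[patch["column"]] = parsed.strftime(patch["to"])
        writer.writerow(row)
    return out.getvalue()

# Made-up sample rows, not real spending data.
SAMPLE = (
    "date,department,amount\n"
    "03/31/2010,Treasury,25000\n"
    "12/01/2010,Defra,31000\n"
)

patched = apply_patch(SAMPLE, PATCH)
print(patched)
```

A reviewer reads four lines of patch rather than every changed row, and the same patch can be re-applied when the publisher releases the next file with the same problem.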

[1] I’m inevitably simplifying here. For example, there is of course some direct consumption by end users. There is some sharing between systems etc.

[2] I discussed some of the work around data revisioning in this previous post: We Need Distributed Revision/Version Control for Data.

Rufus Pollock is Founder and President of Open Knowledge.

Governments should pay for automated validation services to check the formatting of the data they publish.

Here’s a csv validation service I’ve written to check the UK government spending data: http://validator.govtrace.com/ – about 45% of the files linked to from data.gov.uk are badly formatted – it’ll be interesting to see how the government responds to this feedback.

@delineator (Rob): that’s really interesting (and useful) :)

In this case I know that the (UK) gov are aware of the need to do validation on that material and now have an alpha QA framework in place which is about to be switched on properly.

What’s interesting though, is that would still be fixing at source — not a data cycle. Finding a way to incorporate your kind of feedback would be …

Great write up of the problem, Rufus. Could we start by adding a ‘Send Feedback’ feature for each Resource in a CKAN Dataset, which could add a public Resource Annotation and send an email to the Author email address, if provided?

A good post to see. I’m just about to do a small further bit of work around ways to record and capture information on the chains of data re-use (and to make it easy for people to share at least meta-data (i.e. provenance info) on how they have used data) which could act as a starting point for the more technical issues around data management and distributed revision control. Building on this: http://www.practicalparticipation.co.uk/odi/2010/08/open-data-workflows/ and hoping to get started in the next few weeks. Would be good to talk about how it might fit in with other open data eco-system components like CKAN.

You identify well the language shift needed from always talking about apps, to talking about the whole range of ways people use data, and to value all those diverse uses of data.

This draft paper might also be of interest on the importance of opening up the data cleaning, source code etc stages: http://www.practicalparticipation.co.uk/odi/2011/02/what-supports-the-sustainable-re-use-of-open-data/

For many many years, mySociety have had an automated correction loop for local councillor data on WriteToThem, that I don’t think we’ve ever written up.

There’s a link “Have you spotted a mistake in the above list?” below your list of councillors after you enter your postcode. The data that it captures gets automatically emailed to our data provider (GovEval) in a nice report once a week. They then manually check the corrections are true, and update their source, which we then later automatically update WriteToThem with.

In this case it is closed data. GovEval sell their database of councillors for money to other users, but mySociety are effectively paying for it with corrections. From our point of view it feels like GovEval are a human web service (such as Mechanical Turk) which validates new data for us.

Similar automatic loops could exist for open data – perhaps it could be used as an argument to get Government to open up data. “If you open it up, then we’ll get many eyeballs to send you corrections, which will benefit you”.

I agree Francis – the crowdsourcing data quality proposition is one we need to be able to deliver on with effective feedback loops to dataset authors.

Or indeed, following Nigel’s post, an argument for businesses to open up their data.

In cases where any data isn’t the unique value of the business, and they would benefit from others helping maintain the quality of it, getting fixes is a good reason to make the effort of publishing it.

This is all good stuff.

I think you might be overestimating the importance of an algorithmic diff. No source tools I am aware of actually parse the syntax tree when diffing. Nevertheless, the combination of a commit message (what was done) and a line based diff (where it was done) are very useful.

Mapping this approach to data, you should log ‘fixed date column with fix_date.py’ and just accept that a diff will show you every change.
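As a rough illustration of this commit-message-plus-line-diff approach, here is a small sketch using Python’s standard `difflib`. The file names and rows are made up; only the log message comes from the comment above.

```python
import difflib

# Made-up rows before and after a column-wide date fix.
before = ["date,amount", "03/31/2010,25000", "12/01/2010,31000"]
after = ["date,amount", "2010-03-31,25000", "2010-12-01,31000"]

# The "what was done" part: a one-line log entry.
commit_message = "fixed date column with fix_date.py"

# The "where it was done" part: a plain line-based diff.
diff = list(
    difflib.unified_diff(
        before,
        after,
        fromfile="spending.csv (original)",
        tofile="spending.csv (cleaned)",
        lineterm="",
    )
)

# Every data row touched by the fix shows up as a removed line.
removed = [line for line in diff if line.startswith("-") and not line.startswith("---")]

print(commit_message)
print("\n".join(diff))
print(len(removed), "rows changed")
```

The diff grows with the number of rows, which is the trade-off being accepted here: the message carries the intent, the diff carries the detail.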

I agree this does point to the need for a DVCS for data so that we can trace the original source and patch authors.

Great post, Rufus. Is it fair to say that it is often the quality of what you label automated integration (merge) tools that dictates the success of other crowd-sourced feedback loops (eg FLOSS)?

Good post, Rufus. For open data to become sustainable there has to be a feedback mechanism so that data increases in value for both providers and users.

With DataGM – which is based on CKAN – we are trying to create a datastore that will be as valuable to public data providers as it is to third parties. With 600 public employees in Greater Manchester trying to locate the data that they need to do their jobs every day, there is a compelling case for local authorities to do this, and as the value of a central catalogue is proven the idea of automated release becomes a distinct possibility.

Even though the datastore has only recently come online we are already seeing cleaned data uploaded by third parties. One such dataset to do with transport pointed out 164 errors in the original dataset. In an ideal world you would hope that there would now be a conversation between the data provider and the person who identified the errors. This will become evident over the coming months. We have also had a local newspaper upload a dataset that is relevant to the recently released police crime stats.

All this is fantastic but there are a number of potential problems that need to be looked at. There is a concern about multiple versions of the same dataset being in existence. This wouldn’t be too much of a problem if the dataset was corrected at source in a timely manner.

As a datastore, how do we accommodate these value added datasets? DataGM is a repository of raw data: does that mean that it is just that, or should it be a catalogue of where value added data could be found? If the latter, then a process would need to be developed to accommodate these, probably including a changelog.

It would also be problematic if the value added dataset fell foul of the Data Protection Act either in itself or by allowing other data to be ‘jigsawed’ – two or more anonymised datasets revealing the identity of an individual.

Rufus

Important post. I think I’d make the boundaries fuzzier than this. As Emer Coleman at the GLA says, the data is the least important part of open data. The key thing is that it allows a freer flow of data between institutions, and between institutions and people leading to a blurring of lines between the government and the community.

At the moment the only blurring is between monolithic government institutions and monolithic ‘trusted’ outsourcing corporate ‘partners’, leading to the sort of crazy position Francis detailed with GovEval, where the community does data cleansing for it and in doing so strengthens its entrenched position.

A final point is to avoid the community becoming monolithic itself, and I think this will happen if we aren’t careful.

@David: I like the idea of send feedback extension. The big issue is: who’s listening when you send feedback and what can they do?

@Francis: really like the GovEval story, even if it is closed data! I think it is also a good reminder to do the simplest thing first: we can get a long way with “sellotape and bits of string”, something I think James Casbon is also arguing for.

@Julian: I am delighted to hear of these great examples of corrected data already finding their way back into the system. The key point you make is that it is not enough just to get the data submitted back, we also need to make sure it is incorporated back into the original.

You also raise the interesting issue of multiplying (and possibly diverging) versions of the same data (what I would term ‘forking’).

Again it’s interesting to look at the software world where, especially with distributed version control systems like git and mercurial, ‘forking’ of codebases is ubiquitous. With the right tools, in particular prominent signposting of alternative versions of data and their inter-relation and relative up-to-date-ness, I don’t think forking is a big problem.

@Chris: point well-taken. I guess MySociety felt some data was better than no data (I assume the data cleaning was part of a quid pro quo with GovEval).

I think your point about diversity is a good one: ecosystems aren’t a monoculture (though I note that all rich ecosystems also have parasites!).

Great idea. Here’s why it won’t get funded. Conservative voters in the U.S. comprise a large set of people with a high-school diploma. They are easily swayed by a smooth talker, don’t trust educated people, and are told (ad infinitum by FAUX News) that universities are run by bleeding-heart liberals who want to take all their money and give it to deadbeat drug users in the inner city (that’s code for ‘people with dark skin’). The result is that any project that seeks to improve and spread knowledge will fail to get government funding. The latest incarnation is the Tea Party, started by the richest of the energy barons (Koch brothers). Since the Tea Party seeks to disassemble the government they don’t want (which would suit the Koch brothers just fine) I believe they should stop using public roads to get to their meetings.

At Recollect – the civic garbage reminder service – we’ve done this cleaning and normalizing process on many collection datasets across Canadian Municipalities.

When we load a city into Recollect, we go through their existing web resources (be it .kml, .shp, .pdf, .jpg, …) and sometimes find bugs or problems with their calendars. Other times, we take an “artist’s rendition” of the garbage collection zones and turn it into real, downloadable .kml maps.

We also make it a first class goal to expose all the data we’ve loaded into Recollect as an API so that citizens in those cities can build new services and prototypes on top of Recollect. Because our API is standard across all the cities we support, innovations from citizens in a big town can be useful for citizens in a small municipality.

Good post. It appears we stumbled across the term “data ecosystem” at the same time. I just published a paper discussing the concept, especially those data wranglers or infomediaries. The paper is in press but you can find a preprint at http://dl.dropbox.com/u/546900/JIS-1391-v6.pdf.